Sammy Christen

Artificial intelligence (AI) systems offer new possibilities to tackle problems related to the climate crisis. However, these systems bring drawbacks as well as advantages. Recent studies show that artificial intelligence itself can have a large carbon footprint. Hence, a critical view is necessary to understand the vast energy consumption of AI and its impact on the environment.

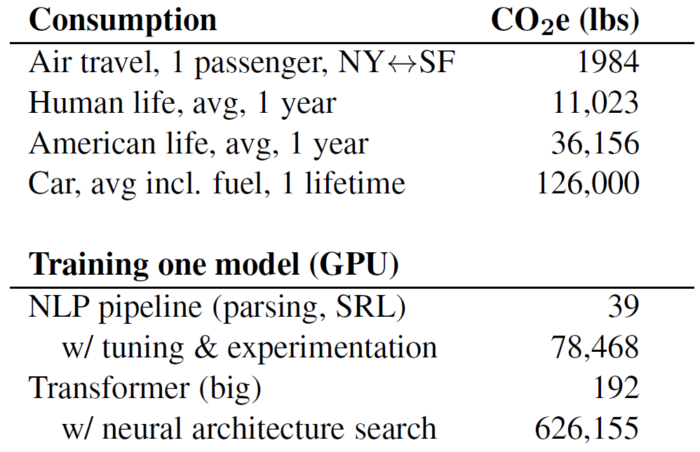

Algorithms that reach human-level performance on specific tasks have become increasingly ubiquitous in our society. While many applications we interact with daily make use of artificial intelligence, companies have also realized the potential of such technologies in industrial applications, which often have positive side effects that seemingly help to tackle climate change. Use cases include the optimization of logistics and operations, electrical grids, or precision agriculture (Rolnick et al. 2022). For example, AI has been employed to optimize the cooling in large data centers, thereby reducing their carbon footprint and electricity costs. A recent study by Strubell et al. (2019) estimates that AI could reduce global greenhouse gas emissions by up to 10% by the end of the decade. However, one aspect that is often overlooked is the carbon footprint of AI systems themselves. For instance, training a well-known AI model, including the search for its architecture, was shown to emit nearly five times as much CO2 as an average car over its lifetime, including fuel (see Figure 1). Hence, companies that integrate AI into their workflows should take the carbon emissions of the AI systems themselves into account and choose their systems carefully.

Figure 1: CO2 emissions caused by training standard AI models compared with familiar sources of emissions (Strubell et al. 2019).

What causes the carbon footprint?

Modern AI algorithms are typically based on neural networks, which are computing systems that can be used to model complex processes. Neural networks are composed of interconnected artificial neurons, loosely inspired by the neurons and synapses in the human brain (see Figure 2). Each neuron receives signals from neighboring neurons and fires a signal itself if their combined strength surpasses a threshold. To model a certain task, the network therefore has to be trained: the strengths of the connections between the artificial neurons (the synapses) and the thresholds for passing a signal on have to be adjusted. Most often, training relies on a dataset of samples that guides this tuning. For example, if a neural network should detect whether an image shows a cat or a dog, a dataset of labeled cat and dog images can help it learn the task.

Since the neurons are densely interconnected, there are a lot of parameters to adjust. For example, a standard model in Natural Language Processing (NLP), the field concerned with understanding and generating language, contains around 65 million parameters. Training is therefore time-consuming and requires a lot of computational power. The computations are typically carried out on expensive, specialized hardware, such as GPUs (Graphics Processing Units) or TPUs (Tensor Processing Units). For instance, finding the model structure of one state-of-the-art NLP system through an automated architecture search required the equivalent of 274’120 hours of training on 8 GPUs (Strubell et al. 2019). The next question, then, is how much carbon dioxide the training of AI models emits.
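The idea of neurons that fire above a threshold, and of training as the adjustment of connection strengths and thresholds, can be illustrated with a single artificial neuron trained by the classic perceptron rule. This is a minimal sketch under simplifying assumptions, not how modern NLP models are trained (they rely on gradient-based optimization over millions of parameters); the function names, the toy AND dataset, the learning rate, and the layer sizes are all hypothetical. The last function hints at why parameter counts grow so quickly: in a fully connected network, every neuron in one layer connects to every neuron in the next.

```python
from typing import List, Sequence, Tuple


def neuron_fires(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> int:
    """Fire (return 1) if the weighted sum of incoming signals exceeds the threshold."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum > threshold else 0


def train_perceptron(samples: List[List[float]], labels: List[int],
                     epochs: int = 20, lr: float = 0.1) -> Tuple[List[float], float]:
    """Adjust connection weights and the threshold from labeled samples (perceptron rule)."""
    weights = [0.0] * len(samples[0])
    threshold = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - neuron_fires(x, weights, threshold)
            # Strengthen or weaken each connection in proportion to its input signal
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            # Lower the threshold if the neuron should have fired, raise it if it should not have
            threshold -= lr * error
    return weights, threshold


def count_parameters(layer_sizes: Sequence[int]) -> int:
    """Weights between consecutive layers plus one threshold (bias) per non-input neuron."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


if __name__ == "__main__":
    # Toy dataset (hypothetical): learn the logical AND of two binary inputs
    X = [[0, 0], [0, 1], [1, 0], [1, 1]]
    y = [0, 0, 0, 1]
    w, t = train_perceptron(X, y)
    print([neuron_fires(x, w, t) for x in X])  # [0, 0, 0, 1]
    # Hypothetical network: 784 inputs, two hidden layers of 512 neurons, 10 outputs
    print(count_parameters([784, 512, 512, 10]))  # 669706
```

Even this small, hypothetical network already has about 670’000 parameters; models with tens of millions of parameters multiply the training effort accordingly.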

Figure 2: Depiction of an artificial neural network that is inspired by the human brain (image source: Peshkova, http://shutterstock.com).

How large is the carbon footprint of AI systems?

To estimate how much CO2 AI systems cause, Strubell et al. (2019) compute the electricity consumed while training a neural network. They sum the average power drawn by the CPU sockets, the RAM, and all GPUs, multiply it by the training time, and scale the result by a factor that accounts for the energy required to support the infrastructure, such as cooling the hardware. The U.S. Environmental Protection Agency publishes the average CO2 emitted per unit of electricity consumed in the U.S. (EPA 2018), which reflects the mix of energy sources (renewables, gas, coal, nuclear, etc.). Multiplying the energy consumption by this average yields the CO2 emissions caused by training an AI system.

The results show that training the largest NLP model, including the architecture search needed to find its structure, emits 626’155 lbs (about 284 metric tons) of CO2, with cloud compute costs between roughly 1 million and 3 million U.S. dollars. This is equivalent to the emissions caused by roughly 500 transatlantic flights. For smaller AI systems, training a single model consumes far less power. However, developing an AI model typically requires many iterations, and if the full development cycle is taken into account, the numbers are again alarming. In a case study of one research project, going from prototype to final system was estimated to require 27 years of training on a single GPU, with cloud compute costs of up to 350’000 U.S. dollars and roughly 10’000 U.S. dollars in electricity. Parallelizing the training across multiple GPUs can reduce this time massively, although often at the price of even higher power consumption.
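The estimate described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function names are hypothetical, and the two constants (a power usage effectiveness of 1.58 for infrastructure overhead and an average of 0.954 lbs of CO2 per kWh for the U.S. grid) are the values reported by Strubell et al. (2019), treated here as assumptions. Plugging in the reported average draw of about 1’515 W for the 8-GPU setup and the 274’120 training hours reproduces the 626’155 lbs figure.

```python
# Constants as reported by Strubell et al. (2019); treat them as assumptions
PUE = 1.58               # power usage effectiveness: overhead for cooling and other infrastructure
LBS_CO2_PER_KWH = 0.954  # average U.S. CO2 emissions per kWh of electricity (EPA data)
KG_PER_LB = 0.45359237


def average_power_watts(cpu_watts: float, ram_watts: float, gpu_watts: float, num_gpus: int) -> float:
    """Total average draw: CPU sockets + RAM + every GPU (a sum, not a mean)."""
    return cpu_watts + ram_watts + num_gpus * gpu_watts


def energy_kwh(hours: float, power_watts: float, pue: float = PUE) -> float:
    """Energy drawn from the grid over the training time, scaled up by the PUE."""
    return pue * hours * power_watts / 1000


def co2_lbs(kwh: float, lbs_per_kwh: float = LBS_CO2_PER_KWH) -> float:
    """Convert energy into pounds of CO2 using a grid-average emissions factor."""
    return lbs_per_kwh * kwh


def lbs_to_metric_tons(lbs: float) -> float:
    return lbs * KG_PER_LB / 1000


if __name__ == "__main__":
    # Architecture search for a large NLP model: about 1'515 W on 8 GPUs for 274'120 hours
    kwh = energy_kwh(hours=274_120, power_watts=1515.43)
    print(round(kwh))                               # 656347
    print(round(co2_lbs(kwh)))                      # 626155
    print(round(lbs_to_metric_tons(co2_lbs(kwh))))  # 284
```

Swapping in a lower emissions factor, for example for a grid with a larger share of renewables, shows directly how much the location of training matters for the final estimate.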

What could be done in order to reduce the carbon footprint of AI?

Strubell et al. (2019) argue that tackling this problem requires change on several fronts. First and foremost, researchers and engineers should be obligated to report the training time, the hardware used, and potentially even a measure of carbon emissions. This would help others choose which systems to employ. The second important point is the responsibility of researchers themselves. If the community starts favoring efficient AI systems, it will put implicit pressure on the field to make systems more efficient and to move away from large, data-hungry models with large carbon footprints. This in turn will also help create more equal opportunities for small research groups with less funding, because large models can most often be afforded only by big tech companies and well-funded research labs. This is problematic, as start-ups or young researchers with creative minds may not be able to execute their ideas. Lastly, AI is not the solution to all problems, and alternatives are often more effective and efficient, especially if the carbon footprint is taken into account. Therefore, it is important to critically assess where AI is beneficial and where it is more of a curse than a cure.

References

David Rolnick, Priya L. Donti, Lynn H. Kaack, Kelly Kochanski, Alexandre Lacoste, Kris Sankaran, Andrew Slavin Ross, Nikola Milojevic-Dupont, Natasha Jaques, Anna Waldman-Brown, Alexandra Luccioni, Tegan Maharaj, Evan D. Sherwin, S. Karthik Mukkavilli, Konrad P. Kording, Carla Gomes, Andrew Y. Ng, Demis Hassabis, John C. Platt, Felix Creutzig, Jennifer Chayes, Yoshua Bengio, “Tackling Climate Change with Machine Learning”, ACM Computing Surveys (CSUR), 55(2), Article 42, 2022. doi:10.1145/3485128

Emma Strubell, Ananya Ganesh, Andrew McCallum, “Energy and Policy Considerations for Deep Learning in NLP”, Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (ACL), Florence, Italy, July 2019. doi:10.48550/arXiv.1906.02243

EPA (U.S. Environmental Protection Agency), “Emissions & Generation Resource Integrated Database (eGRID)”, Technical report, 2018.